1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

| # -*- coding: utf-8 -*-

"""

Created on Sat Dec 5 23:31:22 2015

@author: bitjoy.net

"""

from os import listdir

import xml.etree.ElementTree as ET

import jieba

import sqlite3

import configparser

class Doc:

docid = 0

date_time = ''

tf = 0

ld = 0

def __init__(self, docid, date_time, tf, ld):

self.docid = docid

self.date_time = date_time

self.tf = tf

self.ld = ld

def __repr__(self):

return(str(self.docid) + '\t' + self.date_time + '\t' + str(self.tf) + '\t' + str(self.ld))

def __str__(self):

return(str(self.docid) + '\t' + self.date_time + '\t' + str(self.tf) + '\t' + str(self.ld))

class IndexModule:

stop_words = set()

postings_lists = {}

config_path = ''

config_encoding = ''

def __init__(self, config_path, config_encoding):

self.config_path = config_path

self.config_encoding = config_encoding

config = configparser.ConfigParser()

config.read(config_path, config_encoding)

f = open(config['DEFAULT']['stop_words_path'], encoding = config['DEFAULT']['stop_words_encoding'])

words = f.read()

self.stop_words = set(words.split('\n'))

def is_number(self, s):

try:

float(s)

return True

except ValueError:

return False

def clean_list(self, seg_list):

cleaned_dict = {}

n = 0

for i in seg_list:

i = i.strip().lower()

if i != '' and not self.is_number(i) and i not in self.stop_words:

n = n + 1

if i in cleaned_dict:

cleaned_dict[i] = cleaned_dict[i] + 1

else:

cleaned_dict[i] = 1

return n, cleaned_dict

def write_postings_to_db(self, db_path):

conn = sqlite3.connect(db_path)

c = conn.cursor()

c.execute("'DROP TABLE IF EXISTS postings'")

c.execute("'CREATE TABLE postings(term TEXT PRIMARY KEY, df INTEGER, docs TEXT)'")

for key, value in self.postings_lists.items():

doc_list = '\n'.join(map(str,value[1]))

t = (key, value[0], doc_list)

c.execute("INSERT INTO postings VALUES (?, ?, ?)", t)

conn.commit()

conn.close()

def construct_postings_lists(self):

config = configparser.ConfigParser()

config.read(self.config_path, self.config_encoding)

files = listdir(config['DEFAULT']['doc_dir_path'])

AVG_L = 0

for i in files:

root = ET.parse(config['DEFAULT']['doc_dir_path'] + i).getroot()

title = root.find('title').text

body = root.find('body').text

docid = int(root.find('id').text)

date_time = root.find('datetime').text

seg_list = jieba.lcut(title + '。' + body, cut_all=False)

ld, cleaned_dict = self.clean_list(seg_list)

AVG_L = AVG_L + ld

for key, value in cleaned_dict.items():

d = Doc(docid, date_time, value, ld)

if key in self.postings_lists:

self.postings_lists[key][0] = self.postings_lists[key][0] + 1 # df++

self.postings_lists[key][1].append(d)

else:

self.postings_lists[key] = [1, [d]] # [df, [Doc]]

AVG_L = AVG_L / len(files)

config.set('DEFAULT', 'N', str(len(files)))

config.set('DEFAULT', 'avg_l', str(AVG_L))

with open(self.config_path, ‘w’, encoding = self.config_encoding) as configfile:

config.write(configfile)

self.write_postings_to_db(config[‘DEFAULT’][‘db_path’])

if __name__ == "__main__":

im = IndexModule('../config.ini', 'utf-8')

im.construct_postings_lists()

|

图1. 布尔检索中的词项文档矩阵

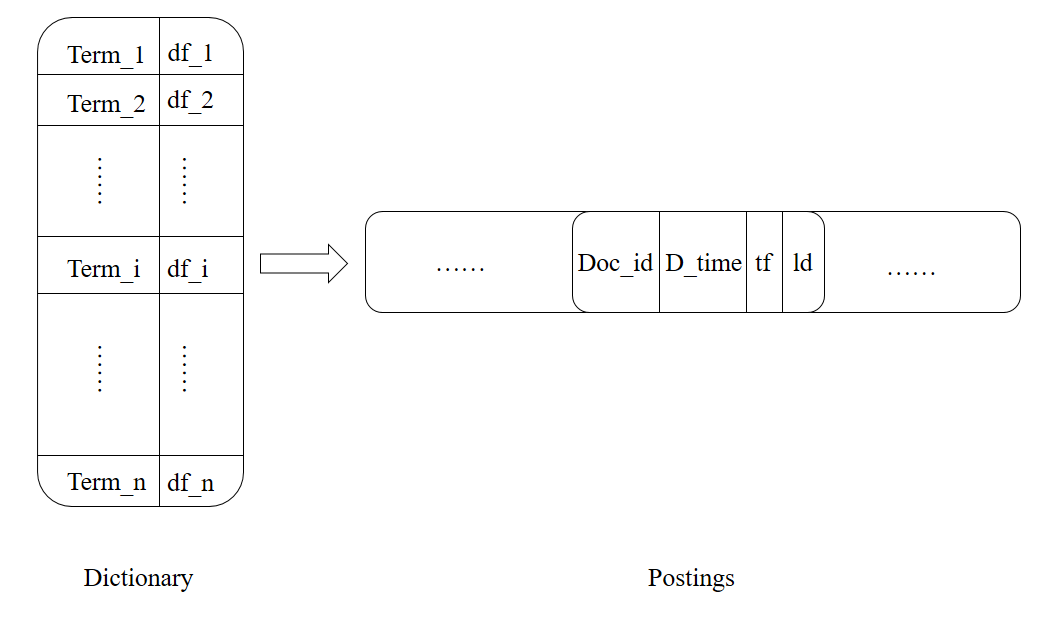

图1. 布尔检索中的词项文档矩阵 图2. 倒排索引结构图

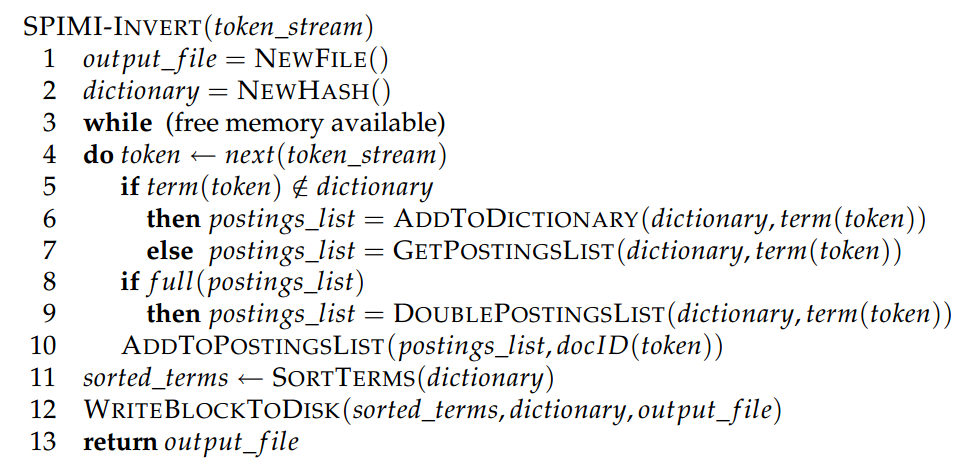

图2. 倒排索引结构图 图3. SPIMI算法伪代码

图3. SPIMI算法伪代码